Two weeks ago, kieranklaassen ran `strings` on Claude Code’s binary and found TeammateTool: a fully implemented multi-agent orchestration system. 13 operations. Directory structures. Environment variables. Feature-flagged off.

That post became the most-shared piece on this blog. The community wanted this.

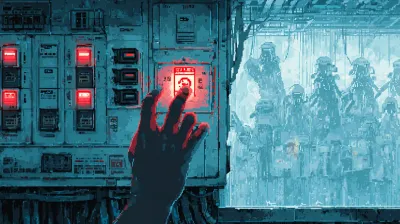

Today, as part of the Opus 4.6 launch, Anthropic flipped the switch. TeammateTool is now “agent teams.” One environment variable:

    export CLAUDE_CODE_EXPERIMENTAL_AGENT_TEAMS=1

The official docs are thorough. Here’s what they reveal about what shipped, what we got right, and what surprised me.

What We Predicted vs What Shipped

The hidden swarm post mapped out 13 operations across three categories: team lifecycle, coordination, and graceful shutdown. The official release kept all of it, but the product surface is cleaner than the raw schema suggested.

Confirmed:

- Team lead + teammates architecture: One session coordinates, others work independently. Each teammate gets its own context window, loads project CLAUDE.md and MCP servers, but doesn’t inherit the lead’s conversation history.

- Shared task list: `~/.claude/tasks/{team-name}/` with pending, in-progress, and completed states. File locking prevents race conditions when multiple teammates claim simultaneously.

- Peer-to-peer messaging: Direct messages between teammates, not just reporting back to the lead. Plus broadcast for all-team communication.

- Plan approval workflow: Teammates can be required to plan before implementing. The lead reviews and approves or rejects with feedback.

- Directory structure: `~/.claude/teams/{team-name}/config.json` with member arrays. Almost exactly what the binary strings revealed.
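The file-locking claim in the shared task list bullet is worth making concrete. Here is a minimal sketch of how an exclusive lock can serialize task claims, assuming a single hypothetical `tasks.json` file and a sidecar `.lock` file. The docs don't specify the on-disk format, so the file names, the JSON shape, and `claim_next_task` are all my assumptions, not Claude Code's implementation. It uses `fcntl.flock`, so it is POSIX-only.

```python
import fcntl
import json
import os
import tempfile


def claim_next_task(tasks_path, teammate):
    """Move the first pending task to in-progress for `teammate`.

    Hypothetical layout: one JSON list of {"id", "status"} dicts.
    The exclusive lock makes the read-modify-write cycle atomic
    with respect to other processes that take the same lock.
    """
    lock_path = tasks_path + ".lock"
    with open(lock_path, "w") as lock:
        fcntl.flock(lock, fcntl.LOCK_EX)  # blocks until the lock is free
        try:
            with open(tasks_path) as f:
                tasks = json.load(f)
            for task in tasks:
                if task["status"] == "pending":
                    task["status"] = "in-progress"
                    task["owner"] = teammate
                    with open(tasks_path, "w") as f:
                        json.dump(tasks, f)
                    return task["id"]
            return None  # nothing left to claim
        finally:
            fcntl.flock(lock, fcntl.LOCK_UN)


def demo_claims():
    """Three teammates compete for two tasks; the third gets nothing."""
    with tempfile.TemporaryDirectory() as d:
        path = os.path.join(d, "tasks.json")
        with open(path, "w") as f:
            json.dump(
                [{"id": "t1", "status": "pending"},
                 {"id": "t2", "status": "pending"}],
                f,
            )
        return [claim_next_task(path, name) for name in ("alice", "bob", "carol")]


if __name__ == "__main__":
    print(demo_claims())
```

Without the lock, two teammates could both read `t1` as pending and both write themselves in as owner. With it, the second reader always sees the first writer's update.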

New (not in the hidden schema):

- Display modes: In-process (all teammates in one terminal, Shift+Up/Down to navigate) and split-pane (each teammate in its own tmux/iTerm2 pane). The split-pane mode is the headline UX feature.

- Delegate mode: Press Shift+Tab to restrict the lead to coordination-only tools. No coding, just spawning, messaging, and task management. Solves the problem of the lead implementing tasks itself instead of delegating.

- Task dependencies: Tasks can block other tasks. Completing a dependency auto-unblocks downstream work.

- Self-claiming: Teammates finish a task and pick up the next unassigned, unblocked one autonomously.
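Task dependencies and self-claiming fit together naturally, so here is a small in-memory sketch of the two bullets above. It assumes a task is claimable when it is pending, unowned, and every task it depends on is completed. The `Task` class, the `blocked_by` field, and the function names are hypothetical; the docs describe the behavior, not the data model.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Set


@dataclass
class Task:
    id: str
    blocked_by: Set[str] = field(default_factory=set)  # ids this task waits on
    status: str = "pending"  # pending | in-progress | completed
    owner: Optional[str] = None


def self_claim(tasks: Dict[str, Task], teammate: str) -> Optional[str]:
    """Claim the first pending, unowned task whose dependencies are all done."""
    done = {t.id for t in tasks.values() if t.status == "completed"}
    for task in tasks.values():
        if task.status == "pending" and task.owner is None and task.blocked_by <= done:
            task.status = "in-progress"
            task.owner = teammate
            return task.id
    return None


def complete(tasks: Dict[str, Task], task_id: str) -> None:
    """Mark a task completed; dependents become claimable on the next scan."""
    tasks[task_id].status = "completed"


def demo_dependencies():
    tasks = {
        "schema": Task("schema"),
        "api": Task("api", blocked_by={"schema"}),
        "tests": Task("tests", blocked_by={"api"}),
    }
    first = self_claim(tasks, "alice")       # only "schema" is unblocked
    blocked = self_claim(tasks, "bob")       # "api" still waits on "schema"
    complete(tasks, "schema")
    unblocked = self_claim(tasks, "bob")     # "api" is now claimable
    return first, blocked, unblocked


if __name__ == "__main__":
    print(demo_dependencies())
```

Note that "auto-unblock" needs no extra bookkeeping in this design: blocked status is derived from the dependency set on every scan, so completing a task is enough. It also shows why a teammate that forgets to mark a task completed stalls everything downstream.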

Subagents run within a single session and only report back to the caller. Agent teams are fully independent Claude Code instances that message each other directly. Use subagents when only the result matters. Use agent teams when agents need to discuss, challenge, and coordinate.

The Patterns That Matter

The docs include use case examples that reveal how Anthropic thinks about multi-agent work. Two stand out.

Competing Hypotheses

This is the most interesting pattern:

    Users report the app exits after one message instead of staying connected.
    Spawn 5 agent teammates to investigate different hypotheses. Have them talk
    to each other to try to disprove each other's theories, like a scientific
    debate. Update the findings doc with whatever consensus emerges.

The docs explain why: “Sequential investigation suffers from anchoring: once one theory is explored, subsequent investigation is biased toward it. With multiple independent investigators actively trying to disprove each other, the theory that survives is much more likely to be the actual root cause.”

This is genuinely novel. Not parallel execution of the same type of work. Adversarial reasoning across isolated contexts. Each agent forms an independent opinion without being anchored by what another agent already found.

Parallel Code Review

Split review criteria into independent domains: security, performance, test coverage. Each reviewer applies a different lens to the same PR. The lead synthesizes findings.

This maps directly to the Gastown pattern: operational roles, not SDLC personas. The agents aren’t “Security Analyst” and “Performance Engineer” as role-playing prompts. They’re the same model with different task scopes.

What’s Still Rough

The limitations section is refreshingly honest:

- No session resumption: `/resume` doesn’t restore teammates. After resuming, the lead may try to message agents that no longer exist. You have to spawn fresh ones.

- One team per session: Clean up the current team before starting a new one. No nested teams either.

- Lead is fixed: Can’t promote a teammate to lead or transfer leadership mid-session.

- Task status can lag: Teammates sometimes fail to mark tasks as completed, blocking dependent work. Manual intervention required.

- Split panes are limited: Requires tmux or iTerm2. No VS Code integrated terminal, no Windows Terminal, no Ghostty.

- Permissions inherit: All teammates start with the lead’s permission mode. No per-teammate permissions at spawn time.

Agent teams use “significantly more tokens than a single session.” Each teammate is a separate Opus instance. Three teammates on a complex task could burn through $50-100+ in tokens quickly. The docs acknowledge this: “For routine tasks, a single session is more cost-effective.”

The Gastown Validation

In the hidden swarm post, I compared TeammateTool’s architecture to Gastown’s operational roles. The official release validates that comparison almost exactly:

| Gastown | Agent Teams |
|---|---|
| Mayor orchestrates | Team lead coordinates |
| Polecats execute in parallel | Teammates work independently |
| Witness/Deacon monitor | Lead monitors + teammates self-report |
| Beads for external state | Shared task list with file locking |
| Git worktrees for isolation | Separate context windows per teammate |

The key difference: scope. Gastown runs 20-30 instances. Agent teams’ docs suggest 2-5 teammates with “5-6 tasks per teammate” as the sweet spot. Anthropic chose restraint where Yegge chose ambition. Given the 19-agent trap, I think that’s the right call.

The community tools that preceded this (claude-flow, ccswarm, oh-my-claudecode) each solved pieces of the puzzle. Agent teams slurps up the core patterns into a native implementation. This is what Anthropic does now: watch the community prove a concept, then ship it as a first-party feature. The scaffolding era keeps ending.

The Proof: A C Compiler From Scratch

Anthropic didn’t just ship docs. Nicholas Carlini from their Safeguards team built a production C compiler using 16 parallel Claude agents over two weeks. 100,000 lines of Rust. Compiles Linux 6.9 on x86, ARM, and RISC-V. 99% pass rate on GCC torture tests. Compiles QEMU, FFmpeg, SQLite, PostgreSQL, Redis, and Doom.

Cost: $20,000 in API calls. Two billion input tokens. 140 million output tokens.
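Those three numbers imply a few useful ratios. The sketch below only does arithmetic on the figures quoted above, which are rounded in the source, so treat the outputs as rough. The blended rate is total spend divided by total tokens; it is not a list price and says nothing about how the bill actually split between input and output.

```python
# Figures as quoted in this post (rounded by the source).
TOTAL_COST_USD = 20_000
INPUT_TOKENS = 2_000_000_000
OUTPUT_TOKENS = 140_000_000
AGENTS = 16


def summarize():
    """Derive blended cost and token ratios from the quoted totals."""
    total_tokens = INPUT_TOKENS + OUTPUT_TOKENS
    return {
        # Total spend spread evenly over every token, input or output.
        "blended_usd_per_million_tokens": round(
            TOTAL_COST_USD / (total_tokens / 1_000_000), 2
        ),
        # How many input tokens were read per output token written.
        "input_to_output_ratio": round(INPUT_TOKENS / OUTPUT_TOKENS, 1),
        # Even split across agents; real per-agent usage surely varied.
        "usd_per_agent": TOTAL_COST_USD / AGENTS,
    }


if __name__ == "__main__":
    for name, value in summarize().items():
        print(f"{name}: {value}")
```

Roughly $9.35 per million tokens blended, about 14 input tokens for every output token, and $1,250 per agent. The input-heavy ratio is the part to notice: parallel agents spend most of their budget re-reading context, not writing code.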

The architecture mirrors agent teams exactly: lock files in current_tasks/ prevent collisions, git handles merging across parallel work streams, each agent runs in isolated Docker containers. Agents finish a task, pick up the next one. As Carlini puts it: “Claude has no choice. The loop runs forever.”
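One common way to get collision-free claims from lock files is atomic exclusive creation: whoever creates the file first owns the task. The sketch below shows that idea on a single filesystem. It is an illustration under my own assumptions, not Carlini's code: the `.lock` suffix, `try_claim`, and `release` are hypothetical, and agents in separate containers would need a shared volume or a sync step such as git for this to work across them.

```python
import os
import tempfile


def try_claim(lock_dir, task_name, agent):
    """Return True if `agent` created the lock file, False if it already existed.

    O_CREAT | O_EXCL makes creation fail when the file exists, so
    check-and-create is a single atomic step on a local filesystem.
    """
    os.makedirs(lock_dir, exist_ok=True)
    path = os.path.join(lock_dir, task_name + ".lock")
    try:
        fd = os.open(path, os.O_CREAT | os.O_EXCL | os.O_WRONLY)
    except FileExistsError:
        return False  # another agent got there first
    with os.fdopen(fd, "w") as f:
        f.write(agent)  # record the owner for debugging
    return True


def release(lock_dir, task_name):
    """Delete the lock file so the task can be claimed again."""
    os.remove(os.path.join(lock_dir, task_name + ".lock"))


def demo_lock_files():
    with tempfile.TemporaryDirectory() as root:
        lock_dir = os.path.join(root, "current_tasks")
        first = try_claim(lock_dir, "fix-parser", "agent-1")
        second = try_claim(lock_dir, "fix-parser", "agent-2")  # collides
        release(lock_dir, "fix-parser")
        third = try_claim(lock_dir, "fix-parser", "agent-2")   # free again
        return first, second, third


if __name__ == "__main__":
    print(demo_lock_files())
```

The weakness of this pattern is stale locks: an agent that crashes mid-task never calls `release`, so a real system needs a timeout or a supervisor to clean up.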

“It’s important that the task verifier is nearly perfect, otherwise Claude will solve the wrong problem.” (Nicholas Carlini, Anthropic)

The key insight: when agents got stuck on the monolithic Linux kernel compilation, Carlini introduced GCC as a “known-good oracle,” letting agents isolate and fix different bugs independently. Same competing hypotheses pattern from the docs, applied to compiler correctness.

Carlini ran 16 agents. Agent teams’ docs suggest 2-5. The compiler project had a perfect test harness (GCC torture tests) that made parallelism safe. Most codebases don’t. The docs’ restraint is pragmatic: scale when you have verification, stay lean when you don’t.

What This Completes

This is the third piece of a story that played out in two weeks:

- Discovery (Jan 26): The community found TeammateTool. Feature-flagged off. “We built it and turned it off.”

- Launch (Feb 6): Opus 4.6 ships with agent teams as a headline feature.

- Architecture (this post): The official docs reveal the full design, and it matches what the community predicted.

The speed is what’s notable. From “hidden in the binary” to “officially documented” in 11 days. Either Anthropic accelerated the launch because the community forced their hand, or the timeline was always this tight and we just caught the pre-release.

Either way, the pattern holds: community proves demand, Anthropic productizes. Beads became Tasks. Gastown became agent teams. The question is what the community builds next that Anthropic hasn’t shipped yet.

“The future of AI coding tools is multi-agent by default. Anthropic knows it. They just haven’t told us yet.” (Hidden swarm post, January 26)

Now they have.