Google released Gemini 3 on November 18 (US time), and for the first time in months, we have an unambiguous #1 model. Not a tight horse race. Not “it depends on the task.” It leads on nearly every capability benchmark I can find, and early users report that it lives up to the hype.

The “AI has hit a wall” crowd was wrong.

Why #1 Matters

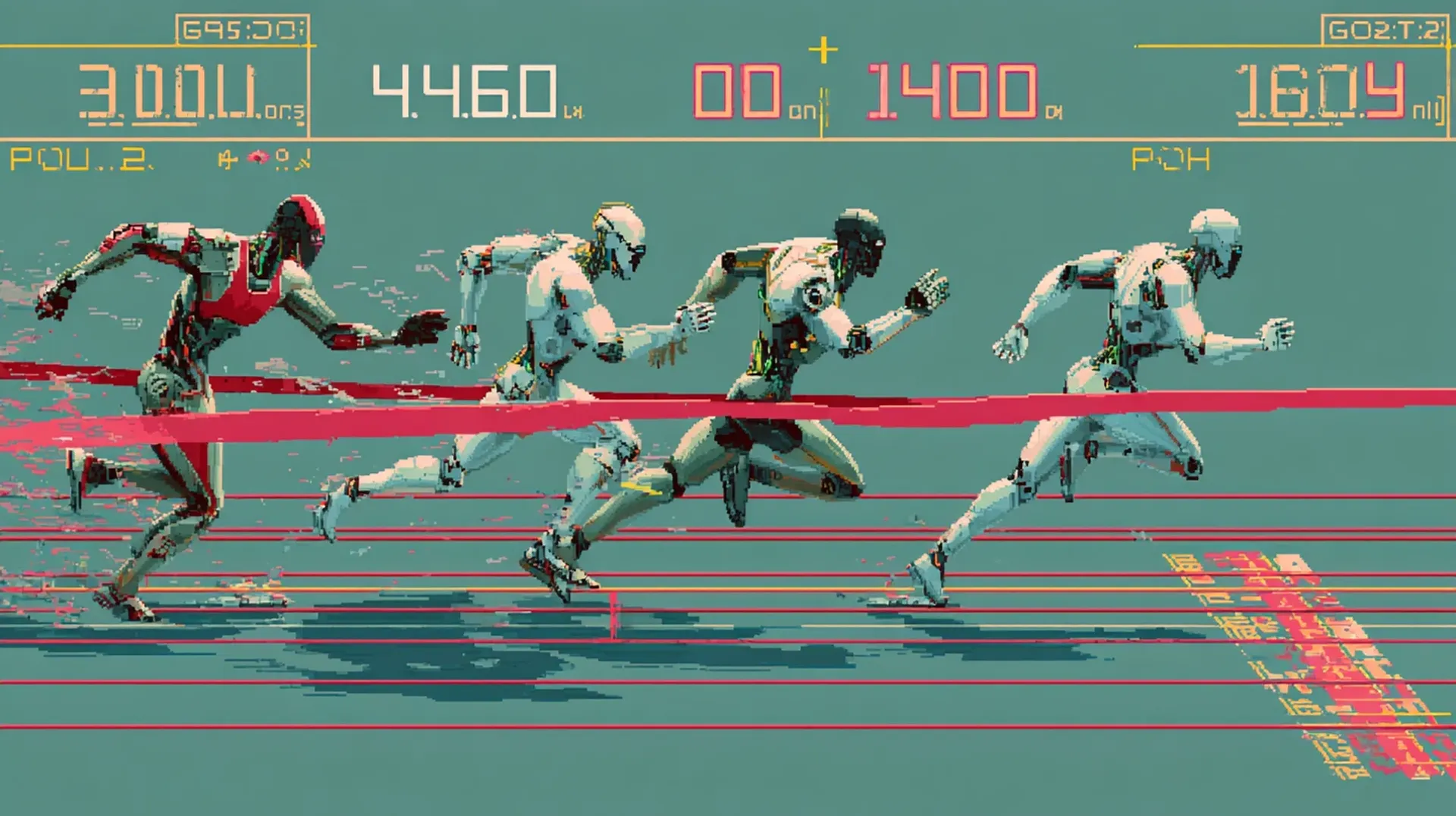

We’ve been in a neck-and-neck race between Claude, GPT, Gemini, and Grok for a while now. Each model had strengths, but none dominated across the board. That made it easy to believe progress was slowing, that we’d hit diminishing returns on scaling.

xAI’s Grok 4.1 dropped on November 17 and briefly claimed the top spot on LMArena (1483 Elo). Hours later, Gemini 3 surpassed it at 1501 Elo. (Elo is a rating system adapted from chess that ranks models based on head-to-head human preference comparisons.)
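To put those two ratings in perspective, here is a minimal sketch that converts an Elo gap into an expected head-to-head preference rate. It assumes the textbook Elo logistic curve with a 400-point scale and ignores ties; LMArena's actual fitting procedure may differ in its details, and the function name is made up for illustration.

```python
def elo_expected_score(rating_a: float, rating_b: float) -> float:
    """Expected share of head-to-head votes for A over B.

    Assumption: the standard Elo logistic curve with a 400-point scale.
    """
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


# Ratings quoted in the paragraph above.
gemini_3 = 1501
grok_4_1 = 1483

p = elo_expected_score(gemini_3, grok_4_1)
print(f"Gemini 3 preferred over Grok 4.1 in about {p:.1%} of comparisons")
```

Under these assumptions an 18-point gap works out to roughly a 52.6% preference rate, so the leaderboard lead on its own is modest.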

But here’s what makes Gemini 3 unambiguous: it doesn’t just win on one leaderboard. It wins across visual understanding, reasoning, coding, and factual accuracy benchmarks. Grok 4.1 topped one chart. Gemini 3 swept the board.

- Leaderboard: First to cross 1500 Elo on LMArena (1501 vs Grok 4.1’s 1483, Claude’s ~1450, GPT’s ~1440)

- Visual understanding: 72.7% on ScreenSpot-Pro (real screen understanding) vs Claude at 36.2% and GPT at 3.5%. It also leads with 81% on MMMU-Pro and 87.6% on Video-MMMU.

- Reasoning: 37.4% on Humanity’s Last Exam (GPT-5 Pro: 31.64%), 31.1% on ARC-AGI-2 (abstract puzzles)

- Coding: 76.2% on SWE-bench Verified (real software tasks), 54.2% on Terminal-Bench 2.0 (operating computers via terminal)

- Factual accuracy paradox: 72.1% on SimpleQA (best), but an 88% hallucination rate per Artificial Analysis (worst among top models)

This isn’t incremental improvement. This is a several-length lead opening up mid-race.

Where Gemini 3 Actually Wins

The benchmark sweep tells a story: Gemini 3 dominates tasks requiring both vision and reasoning.

Gemini has led in visual understanding since the beginning. Even Flash-Lite models punched above their weight here. Gemini 3 extends that lead dramatically: the ScreenSpot-Pro gap (72.7% vs Claude’s 36%, GPT’s 3.5%) shows it understands real screens at a level competitors can’t match.

Combine that with improvements in reasoning, math, and agentic coding, and you get a model that excels at complex, multi-step work. The vision dominance isn’t new, but the gap is.

Cole Medin, AI educator: “You’ve got a really smart colleague that can’t really take your job but can help you get more done faster. And that colleague keeps getting smarter all the time.”

Vibe Coding and Generative UI

Google put “vibe coding” (a term Andrej Karpathy coined earlier in 2025) at the center of the Gemini 3 launch: describe an end goal in natural language, and the model assembles the interface or code needed to get there. No syntax required beyond conversational prompts.

This shipped into Google Search on day one (a first for any Gemini release). AI Mode now dynamically generates custom visual layouts: interactive simulations for physics problems, loan calculators built on the fly, grids and tables tailored to your query.

The technical term is “generative UI,” but the practical implication is that the model can now create the best presentation format for any answer instead of being locked into text-only responses.

Gemini 3 Pro is available now in Google AI Studio and Vertex AI at $2 per million input tokens and $12 per million output tokens (for prompts up to 200K tokens). It is free in AI Studio, with rate limits, during the preview. Third-party platforms like Cursor, GitHub, JetBrains, and Replit are already integrating it.
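As a rough illustration of what those rates mean per request, the sketch below estimates cost from token counts. It uses only the two prices quoted above; the constant names, the function name, and the choice to reject prompts over 200K tokens (since no rate for larger prompts is given here) are assumptions for the example, not part of any Google SDK.

```python
# Prices quoted in the article, in USD per million tokens.
INPUT_USD_PER_MILLION = 2.00
OUTPUT_USD_PER_MILLION = 12.00
# The quoted rates are tied to a 200K-token context, so larger prompts are rejected here.
MAX_PROMPT_TOKENS = 200_000


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimate the cost of one request at the quoted preview rates."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    if input_tokens > MAX_PROMPT_TOKENS:
        raise ValueError("quoted rates only cover prompts up to 200K tokens")
    total = input_tokens * INPUT_USD_PER_MILLION + output_tokens * OUTPUT_USD_PER_MILLION
    return total / 1_000_000


# Hypothetical request: a 50K-token codebase excerpt and a 2K-token answer.
print(f"${estimate_cost_usd(50_000, 2_000):.3f}")
```

For that hypothetical request the estimate comes to about 12 cents. Output tokens cost six times as much as input tokens, so long generated answers dominate the bill faster than long prompts do.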

Deep Think: Extended Reasoning

Gemini 3 Deep Think joins the wave of reasoning models (GPT-5.1 Thinking, Claude Extended Thinking, Grok Think mode, DeepSeek R1). It takes time to “think” before responding and is designed for problems of PhD-level complexity.

Benchmark results:

- 41% on Humanity’s Last Exam (vs 37.4% for standard Gemini 3 Pro)

- 45.1% on ARC-AGI-2 with code execution

- 93.8% on GPQA Diamond (graduate-level science questions)

Deep Think is currently in safety testing, coming to Google AI Ultra subscribers in the coming weeks.

Google Antigravity: The Agentic Platform

Google also launched Antigravity, an agentic development platform where you act as architect and agents autonomously plan and execute software tasks across editor, terminal, and browser.

Technically, it’s another VS Code fork (like Cursor and Windsurf). Leading the project is Varun Mohan, the former Windsurf CEO, who joined Google DeepMind in July 2025 as part of a $2.4B talent acquisition. OpenAI had tried to acquire Windsurf for $3B, but the deal collapsed due to tensions with Microsoft. Google swooped in, hired Mohan and key R&D staff, and licensed Windsurf’s tech. What remained of Windsurf carried on under new leadership and was acquired shortly afterward by Cognition (Devin’s makers) for $250M.

The architecture is different from other forks: three-surface design with an agent manager dashboard, traditional editor, and deep Chrome browser integration. Agents work asynchronously across workspaces, validate their own code, and produce “artifacts” (task lists, plans, screenshots, recordings) that are easier to verify than raw tool calls.

Free during public preview (macOS, Windows, Linux). Powered by Gemini 3, Claude Sonnet, and GPT-OSS models.

Early feedback from Derek Nee (CEO of Flowith): stronger visual understanding, better code generation, improved performance on long tasks. But he’s cautious: “We need deeper testing to understand how far it can go.”

What Gemini 3 Doesn’t Solve

Let’s be clear about limitations:

- Casual tasks: You won’t notice Gemini 3’s advantages for simple questions, planning events, or writing one-pagers

- Human judgment: The model doesn’t handle ambiguity, stakeholder management, tough calls, or creative leaps that humans thrive at

- The 2.5 Pro track record: Gemini 2.5 Pro had serious production issues. 40% of users reported critical workflow disruptions. Developers complained it introduced bugs, ignored instructions, changed variable names randomly, and made up solutions with complete confidence. Response quality degraded over time.

- The hallucination paradox: Gemini 3 scores 72.1% on SimpleQA (best factual accuracy), but Artificial Analysis reports an 88% hallucination rate (higher than GPT/Claude). When it’s right, it’s very right. When it’s wrong, it confidently hallucinates instead of saying “I don’t know.”

- Too early to trust for production: Model launched hours ago. Benchmarks look great, but real-world reliability takes time to assess. Don’t bet your codebase on day-one hype.

Gemini 2.5 Pro looked great on benchmarks but had reliability issues in production (bugs, hallucinations, instruction-following failures). Gemini 3’s hallucination rate is concerning despite accuracy improvements. Wait for real developer feedback before using it for critical work.
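The hallucination paradox is easier to see with numbers. The sketch below assumes that “hallucination rate” means the share of questions the model did not get right where it gave a wrong answer instead of declining to answer, which is my reading of how Artificial Analysis frames the metric. The counts are invented for illustration and are not taken from either benchmark.

```python
from dataclasses import dataclass


@dataclass
class EvalCounts:
    correct: int
    incorrect: int   # answered, but wrong
    abstained: int   # said "I don't know" or declined

    @property
    def total(self) -> int:
        return self.correct + self.incorrect + self.abstained


def accuracy(c: EvalCounts) -> float:
    """Share of all questions answered correctly."""
    return c.correct / c.total


def hallucination_rate(c: EvalCounts) -> float:
    """Assumed definition: wrong answers as a share of all not-correct responses."""
    not_correct = c.incorrect + c.abstained
    return c.incorrect / not_correct if not_correct else 0.0


# Hypothetical models: one almost always guesses, the other abstains when unsure.
bold = EvalCounts(correct=720, incorrect=246, abstained=34)
cautious = EvalCounts(correct=600, incorrect=80, abstained=320)

for name, counts in [("bold", bold), ("cautious", cautious)]:
    print(f"{name}: accuracy {accuracy(counts):.0%}, "
          f"hallucination rate {hallucination_rate(counts):.0%}")
```

In this made-up example the bold model is more accurate (72% vs 60%) yet has a far higher hallucination rate (about 88% vs 20%), because it almost never admits uncertainty. High accuracy and a high hallucination rate can coexist for that reason.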

The areas where Gemini 3 improved (visual acuity, reasoning, coding) are fantastic. But this isn’t AGI, and it won’t replace your job tomorrow. And based on the 2.5 Pro experience, approach production use cautiously.

What This Means for Trajectories

Three takeaways:

- Big jumps are still possible: We thought the race was tight and would stay tight. Gemini 3 proves you can still open up a formidable lead. Don’t get lulled into complacency.

- Rethink capabilities every few months: Every advance in state-of-the-art expands the surface area of workflows AI can cover. Gemini 3 expanded that surface area meaningfully, especially for agentic and visual tasks.

- Multimodal is becoming real: The biggest leaps are in areas where the model needs to both see and think. Visual understanding that doesn’t feel like a weak spot unlocks workflows we couldn’t reliably automate before.

Assume it will get better. AI will continue to cover more workflows. But that doesn’t mean it covers all workflows or replaces human judgment.

Try It Yourself

Focus on complex work: debugging distributed systems, navigating visual interfaces, multi-step reasoning tasks, agentic coding workflows. That’s where you’ll see the difference.

For simple tasks, you won’t notice much. For hard tasks, the gap is striking.

The bar just moved. Again.